AI evaluation tools - AI tools

Arize - Unified Observability and Evaluation Platform for AI

Arize is a comprehensive platform designed to speed up the development of AI applications and agents and to improve how they perform in production.

- Freemium

- From $50

Future AGI - World’s first comprehensive evaluation and optimization platform to help enterprises achieve 99% accuracy in AI applications across software and hardware.

Future AGI is a comprehensive evaluation and optimization platform designed to help enterprises build, evaluate, and improve AI applications, aiming for high accuracy across software and hardware.

- Freemium

- From $50

LastMile AI - Ship generative AI apps to production with confidence.

LastMile AI provides a developer platform that helps teams take generative AI applications from prototype to production.

- Contact for Pricing

- API

Braintrust - The end-to-end platform for building world-class AI apps.

Braintrust provides an end-to-end platform for developing, evaluating, and monitoring Large Language Model (LLM) applications. It helps teams build robust AI products through iterative workflows and real-time analysis.

- Freemium

- From $249

EvalsOne - Evaluate LLMs & RAG Pipelines Quickly

EvalsOne is a platform for rapidly evaluating Large Language Models (LLMs) and Retrieval-Augmented Generation (RAG) pipelines using various metrics.

- Freemium

- From $19

Freeplay - The All-in-One Platform for AI Experimentation, Evaluation, and Observability

Freeplay provides tools for AI teams to run experiments, evaluate model performance, and monitor production systems, streamlining the development process.

- Paid

- From $500

AI Monitor - Don’t Remain Blind in the Age of AI!

AI Monitor is a Generative Engine Optimization (GEO) platform that helps brands track their visibility and reputation across AI platforms such as ChatGPT and Google AI Overviews.

- Contact for Pricing

Okareo - Error Discovery and Evaluation for AI Agents

Okareo provides error discovery and evaluation tools for AI agents, enabling faster iteration, increased accuracy, and optimized performance through advanced monitoring and fine-tuning.

- Freemium

- From $199

Scorecard.io - Testing for production-ready LLM applications, RAG systems, Agents, Chatbots.

Scorecard.io is an evaluation platform designed for testing and validating production-ready generative AI applications, including LLMs, RAG systems, agents, and chatbots. It supports the entire AI production lifecycle, from experiment design to continuous evaluation.

- Contact for Pricing

Mozilla.ai - Empowering Developers with Trustworthy AI

Mozilla.ai is dedicated to making AI trustworthy, accessible, and open source, and provides tools that help developers build and innovate on responsible AI solutions.

- Free

-

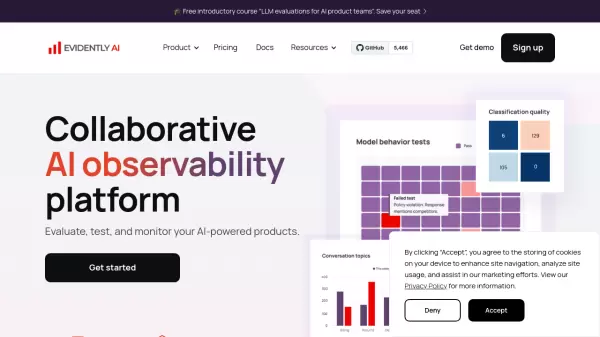

Evidently AI - Collaborative AI observability platform for evaluating, testing, and monitoring AI-powered products

Evidently AI is a comprehensive AI observability platform that helps teams evaluate, test, and monitor LLM and ML models in production, offering data drift detection, quality assessment, and performance monitoring capabilities.

- Freemium

- From $50

Weco - The AI Research Engineer Turning Benchmarks into Breakthroughs

Weco uses AIDE, an AI research engineer, to automate code optimization and research through benchmark-driven experimentation, delivering measurable performance improvements.

- Contact for Pricing

Lisapet.ai - AI Prompt testing suite for product teams

Lisapet.ai is an AI development platform designed to help product teams prototype, test, and deploy AI features efficiently by automating prompt testing.

- Paid

- From $9

Gentrace - Intuitive evals for intelligent applications

Gentrace is an LLM evaluation platform designed for AI teams to test generative AI products and agents and to automate their evaluations. It facilitates collaborative development and helps ensure high-quality LLM applications.

- Usage Based

AI Page Ready - Optimize Your Website for AI Search and Discovery

AI Page Ready delivers AI SEO and discovery analysis to help ensure your website is readily understood and featured by AI assistants and large language models.

- Free

Relari - Trusting your AI should not be hard

Relari offers a contract-based development toolkit to define, inspect, and verify AI agent behavior using natural language, ensuring robustness and reliability.

- Freemium

- From $1000

AI Score My Site - Discover your website's AI search engine readiness

AI Score My Site is a specialized tool that evaluates websites for AI search engine optimization and provides actionable insights for improving AI discoverability and ranking potential.

- Free

AIDetect - The Most Powerful Free AI Detector

AIDetect is an AI detection platform that identifies content generated by models such as ChatGPT, Google Gemini, and Claude Opus with high accuracy, and also offers AI text humanization.

- Freemium

- From $10

The AI Digest - Interactive AI Explainers and Demos to Understand AI's Future

The AI Digest offers interactive explainers and demos showcasing current AI capabilities, helping users understand AI advancements and plan for the future.

- Free

Parea - Test and Evaluate your AI systems

Parea is a platform for testing, evaluating, and monitoring Large Language Model (LLM) applications, helping teams track experiments, collect human feedback, and deploy prompts confidently.

- Freemium

- From $150

ech0 - Hybrid Human-AI Testing for Safer AI Deployments

ech0 provides comprehensive, scalable testing for AI agents, identifying security vulnerabilities, consistency issues, and policy compliance gaps before production deployment.

- Freemium

Adaline - Ship reliable AI faster

Adaline is a collaborative platform for teams building with Large Language Models (LLMs), enabling efficient iteration, evaluation, deployment, and monitoring of prompts.

- Contact for Pricing

Autoblocks - Improve your LLM Product Accuracy with Expert-Driven Testing & Evaluation

Autoblocks is a collaborative testing and evaluation platform for LLM-based products that improves automatically through user and expert feedback, offering tools for monitoring, debugging, and quality assurance.

- Freemium

- From $1750

AI Detector - Detect AI Content and Humanize Text with Precision

AI Detector offers AI text and image detection to identify content generated by models such as ChatGPT, Claude, and Midjourney. It also includes a text humanizer to make text read more authentically.

- Freemium

- From $20

Langtrace - Transform AI Prototypes into Enterprise-Grade Products

Langtrace is an open-source observability and evaluations platform designed to help developers monitor, evaluate, and enhance AI agents for enterprise deployment.

- Freemium

- From $31

Is It AI? - AI Detection Made Simple

Is It AI? offers fast, accurate detectors for identifying AI-generated images and text, helping users verify authenticity and build trust.

- Freemium

- From $8

MentionedBy AI - Comprehensive AI Brand Monitoring and Competitive Analysis

MentionedBy AI helps brands track, analyze, and optimize their presence across more than 20 leading AI models, providing insights for Answer Engine Optimization and AI reputation management.

- Paid

- From $89

Passed.AI - Beyond AI Detection: Guide Students to use AI Appropriately

Passed.AI is an educational tool that helps educators guide students toward responsible AI use through document auditing, AI detection, and plagiarism checking.

- Free Trial

- From $10

Humanloop - The LLM evals platform for enterprises to ship and scale AI with confidence

Humanloop is an enterprise-grade platform that provides tools for LLM evaluation, prompt management, and AI observability, enabling teams to develop, evaluate, and deploy trustworthy AI applications.

- Freemium

-

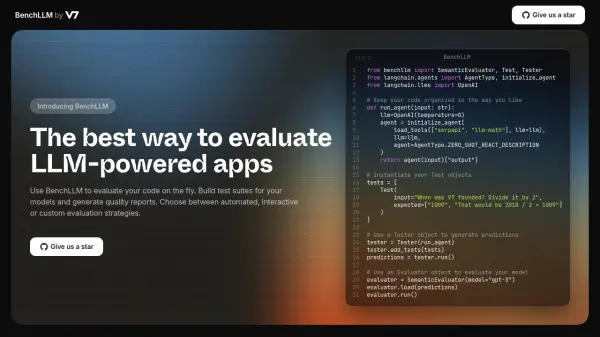

BenchLLM - The best way to evaluate LLM-powered apps

BenchLLM is a tool for evaluating LLM-powered applications. It lets users build test suites, generate quality reports, and choose between automated, interactive, or custom evaluation strategies.

- Other
