LLM comparison tools - AI tools

BenchLLM is a tool for evaluating LLM-powered applications. It allows users to build test suites, generate quality reports, and choose between automated, interactive, or custom evaluation strategies.

- Other

LLM Explorer is a comprehensive platform for discovering, comparing, and accessing over 46,000 open-source Large Language Models (LLMs) and Small Language Models (SLMs).

- Free

ModelBench enables teams to rapidly deploy AI solutions with no-code LLM evaluations. It allows users to compare over 180 models, design and benchmark prompts, and trace LLM runs, accelerating AI development.

- Free Trial

- From $49

Conviction is an AI platform designed for evaluating, testing, and monitoring Large Language Models (LLMs) to help developers build reliable AI applications faster. It focuses on detecting hallucinations, optimizing prompts, and ensuring security.

- Freemium

- From $249

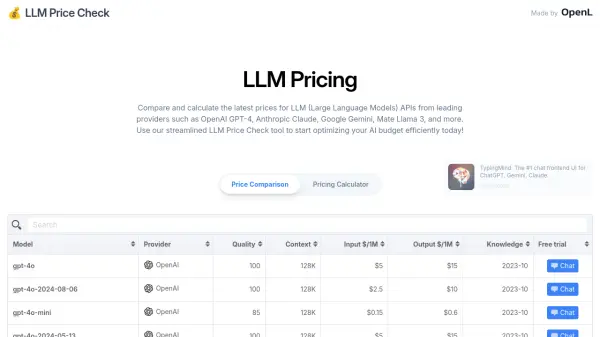

LLM Price Check allows users to compare and calculate prices for Large Language Model (LLM) APIs from providers such as OpenAI, Anthropic, and Google, helping them manage their AI budget.

- Free

MIOSN helps users find the most suitable and cost-effective Large Language Model (LLM) for their specific tasks by analyzing and comparing different models.

- Free

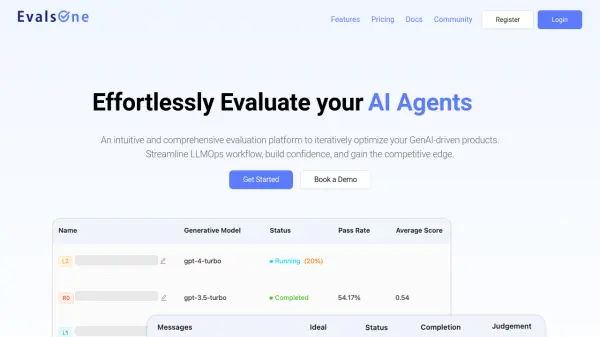

EvalsOne is a platform for rapidly evaluating Large Language Models (LLMs) and Retrieval-Augmented Generation (RAG) pipelines using various metrics.

- Freemium

- From $19

PromptsLabs is a community-driven platform providing copy-paste prompts to test the performance of new LLMs. Users can explore and contribute to its growing collection of prompts.

- Free

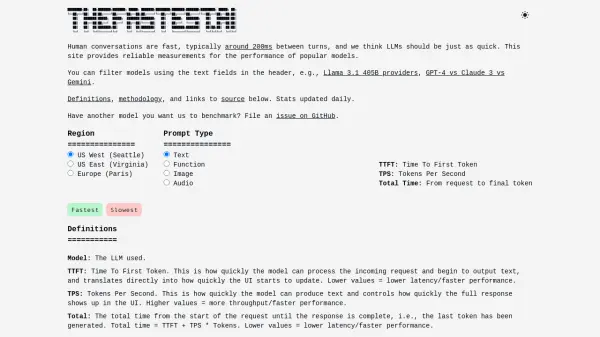

TheFastest.ai provides reliable, daily updated performance benchmarks for popular Large Language Models (LLMs), measuring Time To First Token (TTFT) and Tokens Per Second (TPS) across different regions and prompt types.

- Free

OpenRouter provides a unified interface for accessing and comparing various Large Language Models (LLMs), offering users the ability to find optimal models and pricing for their specific prompts.

- Usage Based

Compare AI Models is a platform providing comprehensive comparisons and insights into various large language models, including GPT-4o, Claude, Llama, and Mistral.

- Freemium
