On-device Large Language Model - AI tools

Bodhi: Run LLMs locally, powered by Open Source

Bodhi is a free, privacy-focused application that lets users run large language models (LLMs) locally on macOS devices without any technical setup.

- Free

Ollama: Get up and running with large language models locally

Ollama is a platform for running language models such as Llama 3.3, DeepSeek-R1, Phi-4, Mistral, and Gemma 2 on a local machine.

- Free

-

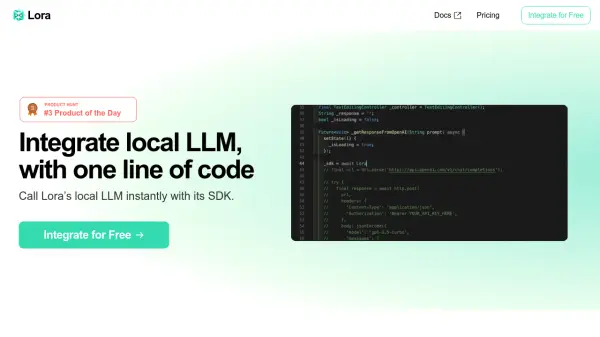

Lora: Integrate a local LLM with one line of code.

Lora provides an SDK for integrating a fine-tuned, mobile-optimized local large language model (LLM) into applications with minimal setup; the vendor claims performance comparable to GPT-4o-mini.

- Freemium

Kalavai: Turn your devices into a scalable LLM platform

Kalavai is a platform for deploying large language models (LLMs) across many kinds of devices, scaling from personal laptops to full production environments, with the aim of simplifying LLM deployment and experimentation.

- Paid

- From $29

Fullmoon: A billion parameters in your pocket. Chat with private, local large language models

Fullmoon is an open-source app for running large language models locally on Apple devices, fully offline and optimized for Apple silicon.

- Free

Kolosal AI: The Ultimate Local LLM Platform

Kolosal AI is a lightweight, open-source application for training, running, and chatting with large language models (LLMs) directly on your own device, keeping data private and under your control.

- Free

ONNX Runtime: Production-grade AI engine for accelerated training and inferencing.

ONNX Runtime is a production-grade engine for accelerating machine learning training and inference across many platforms and programming languages, with support for generative AI and performance optimization.

- Free

WebLLM: High-Performance In-Browser LLM Inference Engine

WebLLM runs large language models (LLMs) directly in the web browser, using WebGPU for hardware acceleration, which reduces server costs and improves privacy.

- Free

GGML: AI at the Edge

GGML is a tensor library for machine learning that enables large models and high performance on commodity hardware; it is designed for efficient on-device inference.

- Free

onedollarai.lol: Access Top Large Language Models for Just $1 a Month

onedollarai.lol provides access to a range of top-tier large language models (LLMs), including Meta Llama 3 and Microsoft Phi, for a flat fee of $1 per month.

- Paid

- From $1

FriendliAI: Accelerate Generative AI Inference

FriendliAI provides a high-performance platform for accelerating generative AI inference, enabling fast, cost-effective, and reliable deployment and serving of large language models (LLMs).

- Usage Based

BrowserAI: Run Local LLMs Inside Your Browser

BrowserAI is an open-source library that lets developers run large language models (LLMs) locally in the user's browser, a privacy-focused approach with no server infrastructure costs.

- Free

Axolotl AI: We make fine-tuning accessible, scalable, fun

Axolotl AI is a free, open-source tool that makes fine-tuning large language models (LLMs) faster, more accessible, and scalable across a range of models and platforms.

- Free

LlamaEdge: The easiest, smallest and fastest local LLM runtime and API server.

LlamaEdge is a lightweight, fast local LLM runtime and API server built with Rust and WasmEdge, designed for building cross-platform LLM agents and web services.

- Free

Float16.cloud: Your AI Infrastructure, Managed & Simplified.

Float16.cloud provides managed GPU infrastructure and LLM solutions for AI workloads, including serverless GPU computing and one-click LLM deployment, with a focus on cost and performance.

- Usage Based

Neural Magic: Deploy Open-Source LLMs to Production with Maximum Efficiency

Neural Magic offers enterprise inference server solutions that streamline AI model deployment, maximizing computational efficiency and reducing costs on both GPU and CPU infrastructure.

- Contact for Pricing

CentML: Better, Faster, Easier AI

CentML streamlines LLM deployment through advanced system optimization and efficient hardware utilization. It provides single-click resource sizing and model serving, and supports a wide range of hardware and models.

- Usage Based