BoundaryML - Alternatives & Competitors

An Expressive Language for Structured Text Generation

BoundaryML (BAML) is a language designed for reliable structured text generation from Large Language Models (LLMs). It offers features like JSON error correction, schema coercion, and improved function-calling.

Ranked by Relevance

1. LLM Outputs: Ensure Your Structured Data is Always Accurate and Reliable

LLM Outputs is an AI tool designed to detect and remediate incorrect structured data generated by Large Language Models (LLMs), ensuring data accuracy and reliability. It validates data formats like JSON, CSV, and XML against specifications to prevent format errors and hallucinations.

- Freemium

-

2

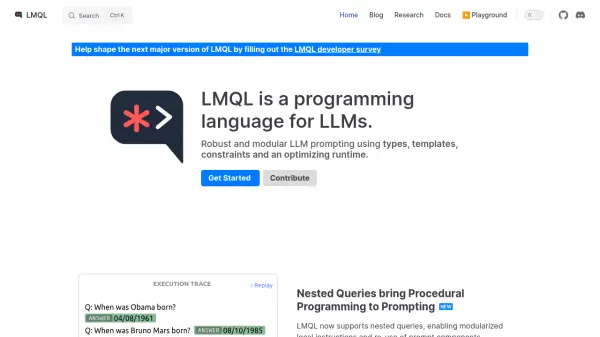

LMQL A programming language for LLMs.

LMQL A programming language for LLMs.LMQL is a programming language designed for large language models, offering robust and modular prompting with types, templates, and constraints.

- Free

-

3

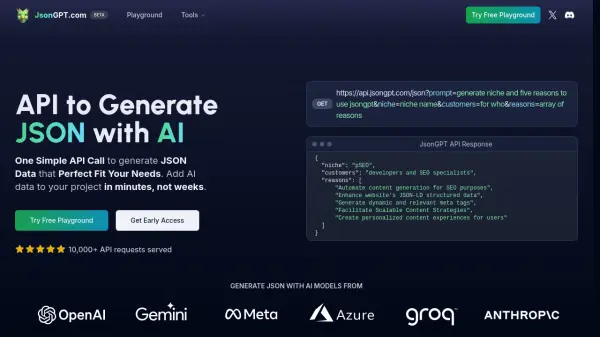

JsonGPT One Simple API Call to generate JSON Data that Perfect Fit Your Needs.

JsonGPT One Simple API Call to generate JSON Data that Perfect Fit Your Needs.JsonGPT offers a simple API to generate structured JSON data using various AI models like OpenAI and Gemini. It streamlines AI integration by handling validation, caching, and streaming.

- Freemium

4. Langtail: The low-code platform for testing AI apps

Langtail is a testing platform that enables teams to test and debug LLM-powered applications with a spreadsheet-like interface, offering security features and integration with major LLM providers.

- Freemium

- From $99

5. Promptotype: The platform for structured prompt engineering

Promptotype is a platform designed for structured prompt engineering, enabling users to develop, test, and monitor LLM tasks efficiently.

- Freemium

- From $6

6. Promptech: The AI teamspace to streamline your workflows

Promptech is a collaborative AI platform that provides prompt engineering tools and teamspace solutions for organizations to effectively utilize Large Language Models (LLMs). It offers access to multiple AI models, workspace management, and enterprise-ready features.

- Paid

- From $20

7. BenchLLM: The best way to evaluate LLM-powered apps

BenchLLM is a tool for evaluating LLM-powered applications. It allows users to build test suites, generate quality reports, and choose between automated, interactive, or custom evaluation strategies.

- Other

8. docs.mistral.ai: Building the Best Open Source Models

Mistral AI is a research lab developing state-of-the-art open-source and commercial Large Language Models (LLMs) for developers and enterprises.

- Other
