What is reflection70b.com?

Reflection-70B is an advanced open-source large language model developed to significantly reduce the occurrence of hallucinations in AI-generated content. Built upon the Llama-3.1 framework, it employs a unique technique called Reflection-Tuning. This method introduces special tokens to structure the model's reasoning process, allowing it to critically evaluate and correct its own potential errors before generating an output.

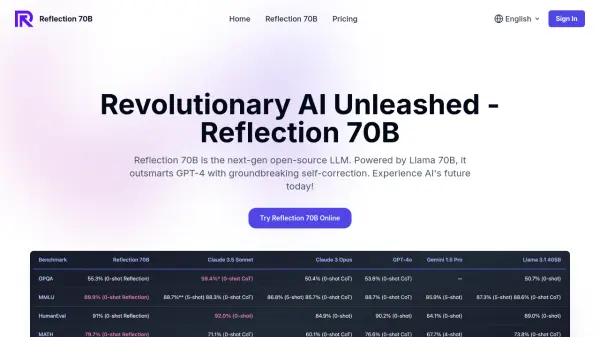

The model was trained on extensive synthetic datasets generated by Glaive, aiming to boost its capabilities in complex natural language processing tasks. Reflection-70B has demonstrated superior performance compared to other prominent models, including GPT-4o, across various academic and reasoning benchmarks like MMLU, MATH, IFEval, and GSM8K. By implementing stricter controls during information verification, it enhances the trustworthiness and reliability of its responses.

Features

-

Architecture: Built on the Llama-3.1 framework with special tokens (

, , - Training Data: Trained on large synthetic datasets generated by Glaive for enhanced NLP performance.

- Performance: Demonstrates superior results on benchmarks like MMLU, MATH, IFEval, and GSM8K, outperforming models like GPT-4o.

- Reduced Hallucinations: Employs strict control mechanisms and Reflection-Tuning to minimize false information generation.

- Open-Source Access: Model weights are available on Hugging Face for community use.

Use Cases

- Generating accurate and reliable text content.

- Developing AI applications requiring high factual accuracy.

- Researching and developing trustworthy AI systems.

- Solving complex problems requiring structured reasoning.

- Building chatbots with reduced misinformation.

FAQs

-

What is Reflection-70B?

Reflection-70B is an advanced open-source language model designed to minimize hallucinations and improve accuracy in AI-generated outputs through a technique called Reflection-Tuning. -

How does Reflection-Tuning work?

Reflection-Tuning teaches the model to detect and correct its own reasoning errors by introducing special tokens like <thinking>, <reflection>, and <output> to structure its thought process. -

What benchmarks does Reflection-70B excel in?

Reflection-70B has demonstrated superior performance across various benchmarks, including MMLU, MATH, IFEval, and GSM8K, outperforming even closed-source models like GPT-4o. -

How does Reflection-70B reduce hallucinations?

By employing stricter control mechanisms during information verification stages, Reflection-70B significantly reduces the generation of false information, enhancing user trust and reliability. -

Where can I access Reflection-70B?

The weights for Reflection-70B are available on Hugging Face, and an API is set to be released through Hyperbolic Labs for easier integration into applications.

Related Queries

Helpful for people in the following professions

reflection70b.com Uptime Monitor

Average Uptime

99.92%

Average Response Time

198.4 ms