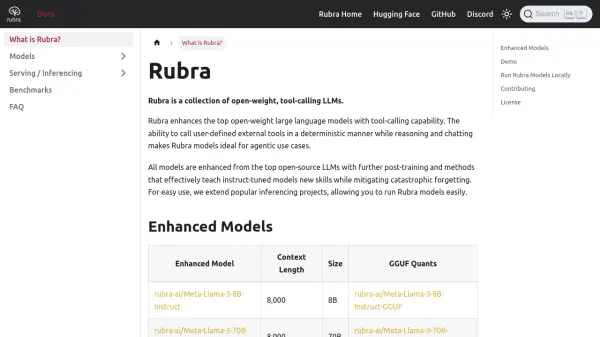

What is Rubra?

Rubra offers a suite of open-weight large language models specifically enhanced for tool-calling functionality. This capability allows the models to interact deterministically with user-defined external tools, making them highly suitable for developing AI agents and complex automated workflows.

The models are derived from leading open-source LLMs (such as Llama 3, Mistral, Gemma, Phi-3, and Qwen2) and undergo further post-training to integrate tool-calling skills while minimizing the loss of their original capabilities. Rubra facilitates easy deployment by extending popular inferencing projects like llama.cpp and vLLM, enabling users to run these enhanced models locally using an OpenAI-compatible tool-calling format.
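As a rough illustration of what an "OpenAI-compatible tool-calling format" usually means in practice, the sketch below builds a chat request body that advertises one tool to the model. The tool `get_weather`, the helper `build_chat_request`, and the model name `rubra-model-placeholder` are all hypothetical and are not taken from Rubra's documentation; only the general shape of the `tools` field follows the widely used OpenAI chat-completions convention.

```python
import json

# Hypothetical tool definition, written in the OpenAI-style "tools" schema.
get_weather_tool = {
    "type": "function",
    "function": {
        "name": "get_weather",
        "description": "Look up the current weather for a city.",
        "parameters": {
            "type": "object",
            "properties": {
                "city": {"type": "string", "description": "City name"},
            },
            "required": ["city"],
        },
    },
}


def build_chat_request(model, user_message, tools):
    """Return a chat-completions request body that advertises the given tools."""
    return {
        "model": model,
        "messages": [{"role": "user", "content": user_message}],
        "tools": tools,
    }


# "rubra-model-placeholder" is a made-up name; use the model your server loads.
payload = build_chat_request(
    "rubra-model-placeholder",
    "What is the weather in Paris?",
    [get_weather_tool],
)
print(json.dumps(payload, indent=2))
```

The body is plain JSON, so the same structure can be sent by any HTTP client or OpenAI-style SDK pointed at a local server.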

Features

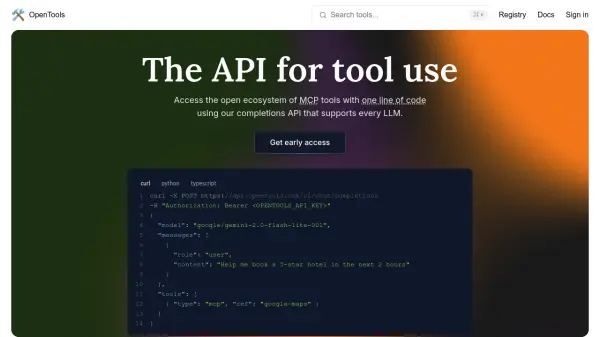

- Tool-Calling Capability: Enhances LLMs to interact with external user-defined tools.

- Open-Weight Models: Based on popular open-source LLMs like Llama 3, Gemma, Mistral, Phi-3, and Qwen2.

- Agentic Use Cases: Ideal for building AI agents that can perform actions.

- Mitigated Catastrophic Forgetting: Post-training methods preserve original model capabilities.

- Local Deployment Support: Extensions for llama.cpp and vLLM for local inferencing.

- OpenAI-Compatible Format: Tool-calling follows a familiar format.

- GGUF Quants Available: Provides quantized versions for efficient deployment.
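To make the tool-calling and OpenAI-compatible format features more concrete, here is a minimal sketch of how an application might act on a model reply that requests a tool. It assumes the reply follows the common OpenAI convention (a `tool_calls` list whose `arguments` field is a JSON-encoded string). The `get_weather` function, `TOOL_REGISTRY`, and the example message are hand-written stand-ins, not real Rubra output.

```python
import json


def get_weather(city):
    # Stand-in implementation; a real tool would call an external service.
    return {"city": city, "forecast": "sunny"}


# Maps tool names the model may request to local Python callables.
TOOL_REGISTRY = {"get_weather": get_weather}


def run_tool_calls(assistant_message, registry):
    """Execute each tool call in an OpenAI-format assistant message.

    Returns a list of "tool" role messages to append to the conversation.
    """
    results = []
    for call in assistant_message.get("tool_calls") or []:
        name = call["function"]["name"]
        if name not in registry:
            raise KeyError(f"model requested unknown tool: {name}")
        # Arguments arrive as a JSON-encoded string in this format.
        args = json.loads(call["function"]["arguments"])
        output = registry[name](**args)
        results.append({
            "role": "tool",
            "tool_call_id": call["id"],
            "content": json.dumps(output),
        })
    return results


# Hand-written example of an assistant message; not real model output.
example_message = {
    "role": "assistant",
    "content": None,
    "tool_calls": [{
        "id": "call_0",
        "type": "function",
        "function": {"name": "get_weather", "arguments": "{\"city\": \"Paris\"}"},
    }],
}

tool_messages = run_tool_calls(example_message, TOOL_REGISTRY)
print(tool_messages)
```

In an agent loop, the returned messages would be appended to the conversation and sent back to the model so it can produce a final answer.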

Use Cases

- Developing AI agents

- Automating workflows requiring external tool interaction

- Integrating LLMs with existing APIs and services

- Creating chatbots with dynamic external data access

- Building custom reasoning engines that leverage external tools

FAQs

- What models does Rubra enhance?

  Rubra enhances models such as Meta-Llama-3 (8B and 70B), gemma-1.1-2b-it, Mistral-7B-Instruct (v0.2 and v0.3), Phi-3-mini-128k-instruct, and Qwen2-7B-Instruct.

- How can I run Rubra models locally?

  You can run Rubra models locally with Rubra's extended versions of inferencing tools such as llama.cpp and vLLM, which expose an OpenAI-compatible tool-calling format.

- What license are Rubra models released under?

  Rubra-enhanced models are published under the same license as their parent model. The Rubra code itself is licensed under Apache 2.0.

- Is there a way to try Rubra without installing anything?

  Yes, you can try the models for free, without logging in, via the Hugging Face Spaces demo.

- Are there any known issues with specific models?

  Llama 3 models (8B and 70B) may show degraded function-calling performance when quantized; using vLLM or the fp16 quantization is recommended for these models.
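For the local-deployment question above, a locally served model would typically be reached over HTTP. The sketch below builds a POST request for an OpenAI-style `/chat/completions` endpoint using only the Python standard library. The address `http://localhost:8000/v1`, the model name, and the helpers `make_chat_request` and `send_chat_request` are assumptions for illustration; check the address and port your own llama.cpp or vLLM server actually listens on.

```python
import json
import urllib.request


def make_chat_request(base_url, payload, api_key="not-needed"):
    """Build (but do not send) a POST request to an OpenAI-style chat endpoint."""
    return urllib.request.Request(
        url=base_url.rstrip("/") + "/chat/completions",
        data=json.dumps(payload).encode("utf-8"),
        headers={
            "Content-Type": "application/json",
            # Placeholder key; a local server may ignore or not require it.
            "Authorization": f"Bearer {api_key}",
        },
        method="POST",
    )


def send_chat_request(request, timeout=60):
    """Send the request and return the decoded JSON response."""
    with urllib.request.urlopen(request, timeout=timeout) as response:
        return json.loads(response.read().decode("utf-8"))


# Assumed address and made-up model name; adjust both to your own setup.
request = make_chat_request(
    "http://localhost:8000/v1",
    {
        "model": "rubra-model-placeholder",
        "messages": [{"role": "user", "content": "Hello"}],
    },
)
print(request.full_url)
```

Calling `send_chat_request(request)` only works once a server is running at that address, which is why the example stops at building the request.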

Rubra Uptime Monitor

Average Uptime: 100%

Average Response Time: 147.17 ms
