What is Gorilla?

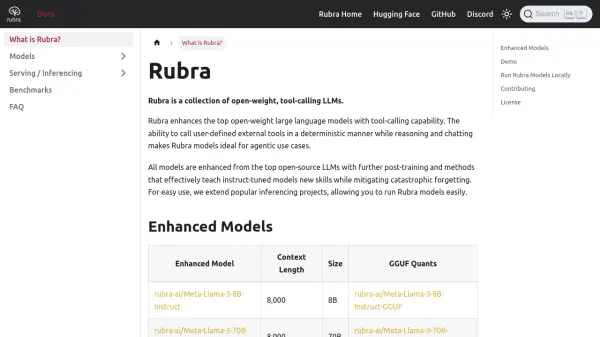

Gorilla is a Large Language Model (LLM) from UC Berkeley research, built to connect LLMs to external services through API calls. It lets an LLM invoke thousands of APIs accurately and reliably, extending what the model can do beyond text generation. The project is open-source and welcomes community collaboration and contributions.

The Gorilla ecosystem has four main components. Gorilla OpenFunctions teaches LLMs tool use, with support for parallel and multiple function calls across Java, REST, and Python. The Berkeley Function-Calling Leaderboard (BFCL) benchmarks LLM function-calling abilities. RAFT (Retriever-Aware FineTuning) optimizes LLMs for domain-specific Retrieval-Augmented Generation (RAG) tasks. GoEX (Gorilla Execution Engine) is a runtime for safely executing LLM-generated actions such as code and API calls, with features like 'undo' and 'damage confinement'.
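To make the idea of parallel function calls concrete, here is a minimal sketch of how an application might dispatch calls that a function-calling model has emitted. It is not Gorilla's or OpenFunctions' actual interface: the JSON shape of the model output, the `TOOLS` registry, and the functions `get_weather` and `convert_currency` are all hypothetical and chosen only for illustration.

```python
import json
from typing import Any, Callable, Dict, List


# Hypothetical tools; in a real application these would wrap API clients.
def get_weather(city: str) -> str:
    return f"weather for {city}"


def convert_currency(amount: float, src: str, dst: str) -> str:
    return f"{amount} {src} -> {dst}"


# Registry mapping function names the model may emit to Python callables.
TOOLS: Dict[str, Callable[..., Any]] = {
    "get_weather": get_weather,
    "convert_currency": convert_currency,
}


def dispatch(model_output: str) -> List[Any]:
    """Parse a JSON list of calls and run each one against the registry."""
    calls = json.loads(model_output)
    results = []
    for call in calls:
        name = call["name"]
        if name not in TOOLS:
            # Reject anything the application did not explicitly expose.
            raise ValueError(f"unknown function: {name}")
        results.append(TOOLS[name](**call["arguments"]))
    return results


# Assumed model output: the same function requested twice in one turn,
# which is what "parallel" function calling refers to here.
example_output = json.dumps([
    {"name": "get_weather", "arguments": {"city": "Berkeley"}},
    {"name": "get_weather", "arguments": {"city": "Oakland"}},
])

if __name__ == "__main__":
    print(dispatch(example_output))
```

Keeping an explicit registry means the model can only trigger functions the application has chosen to expose, whatever text it generates.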

Features

- LLM Connected with Massive APIs: Enables interaction with a wide range of services via API calls.

- Gorilla OpenFunctions: Teaches LLMs tool use, supporting parallel and multiple function calls in Java, REST, and Python.

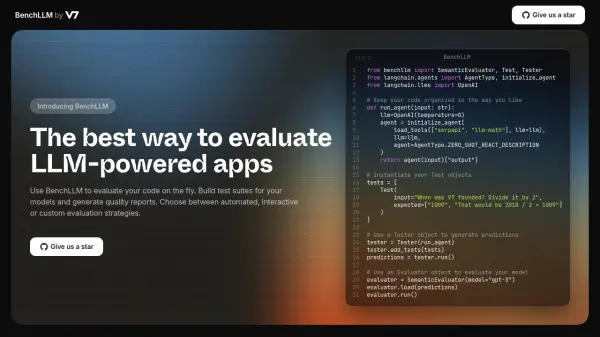

- Berkeley Function Calling Leaderboard (BFCL): Benchmarks the function-calling capabilities of different LLMs.

- RAFT (Retriever-Aware FineTuning): Optimizes LLMs for domain-specific Retrieval-Augmented Generation (RAG).

- GoEX (Gorilla Execution Engine): Provides a runtime for safely executing LLM-generated actions with 'undo' and 'damage confinement' features.

- Open-Source: Models are released under the Apache 2.0 license, which permits commercial use.

- CLI and Spotlight Search Integration: Offers command-line interface and desktop search capabilities.
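The 'undo' feature listed for GoEX can be pictured as pairing every action with a way to reverse it. The sketch below shows that general pattern with an in-memory dictionary standing in for an external service. It is an assumption-laden illustration, not GoEX's implementation: `ReversibleAction`, `Runtime`, and `make_put` are hypothetical names.

```python
from dataclasses import dataclass, field
from typing import Callable, Dict, List


@dataclass
class ReversibleAction:
    """An action bundled with the function that reverses it (hypothetical)."""
    name: str
    do: Callable[[], None]
    undo: Callable[[], None]


@dataclass
class Runtime:
    """Runs actions and keeps a journal so the latest one can be undone."""
    journal: List[ReversibleAction] = field(default_factory=list)

    def execute(self, action: ReversibleAction) -> None:
        action.do()
        self.journal.append(action)

    def undo_last(self) -> bool:
        if not self.journal:
            return False
        self.journal.pop().undo()
        return True


def make_put(store: Dict[str, str], key: str, value: str) -> ReversibleAction:
    """Build a 'write key' action that remembers the prior state when run."""
    saved: Dict[str, object] = {}

    def do() -> None:
        # Capture the previous state at execution time, not creation time.
        saved["had_key"] = key in store
        saved["old_value"] = store.get(key)
        store[key] = value

    def undo() -> None:
        if saved["had_key"]:
            store[key] = saved["old_value"]  # restore the earlier value
        else:
            store.pop(key, None)  # the key did not exist before

    return ReversibleAction(name=f"put {key}", do=do, undo=undo)


if __name__ == "__main__":
    store: Dict[str, str] = {}
    runtime = Runtime()
    runtime.execute(make_put(store, "status", "draft"))
    runtime.execute(make_put(store, "status", "published"))
    runtime.undo_last()
    print(store)
```

Real external actions are not always reversible, which is presumably why the listing mentions 'damage confinement' alongside 'undo'; this sketch covers only the reversible case.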

Use Cases

- Developing applications where LLMs need to interact with external tools and services.

- Benchmarking and comparing the function-calling abilities of various LLMs.

- Fine-tuning LLMs for specific domains requiring Retrieval-Augmented Generation.

- Creating autonomous agents that can execute code or API calls safely.

- Integrating LLM capabilities directly into CLI workflows or desktop search.
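For the benchmarking use case above, one simple way to compare models is to check each predicted function call against a reference call and report the fraction that match. The sketch below uses exact matching on function name and arguments. This is a deliberately simplified assumption and should not be read as BFCL's scoring method; `calls_match` and `accuracy` are hypothetical helpers.

```python
from typing import Any, Dict, List

Call = Dict[str, Any]  # e.g. {"name": "...", "arguments": {...}}


def calls_match(predicted: Call, expected: Call) -> bool:
    """True when both the function name and all arguments are identical."""
    return (
        predicted.get("name") == expected.get("name")
        and predicted.get("arguments") == expected.get("arguments")
    )


def accuracy(predictions: List[Call], references: List[Call]) -> float:
    """Fraction of predictions that exactly match their reference call."""
    if len(predictions) != len(references):
        raise ValueError("predictions and references must align one-to-one")
    if not references:
        return 0.0
    hits = sum(calls_match(p, r) for p, r in zip(predictions, references))
    return hits / len(references)


if __name__ == "__main__":
    references = [
        {"name": "get_weather", "arguments": {"city": "Berkeley"}},
        {"name": "get_weather", "arguments": {"city": "Oakland"}},
    ]
    predictions = [
        {"name": "get_weather", "arguments": {"city": "Berkeley"}},
        {"name": "get_weather", "arguments": {"city": "San Jose"}},
    ]
    print(accuracy(predictions, references))
```

Exact matching is strict: it penalizes a call whose arguments are equivalent but formatted differently, so a practical evaluation would likely need a more tolerant comparison.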

Gorilla Uptime Monitor

Average Uptime

99.71%

Average Response Time

224.57 ms
